Multi-Input & Multi-Output Architectures

Multi-Input

Dataset Class

class OmniglotDataset(Dataset):

def __init__(self, transform, samples):

# Assign transform and samples to class attributes

self.transform = transform

self.samples = samples

def __len__(self):

# Return number of samples

return len(self.samples)

def __getitem__(self, idx):

# Unpack the sample at index idx

img_path, alphabet, label = self.samples[idx]

img = Image.open(img_path).convert('L')

# Transform the image

img_transformed = self.transform(img)

return img_transformed, alphabet, label

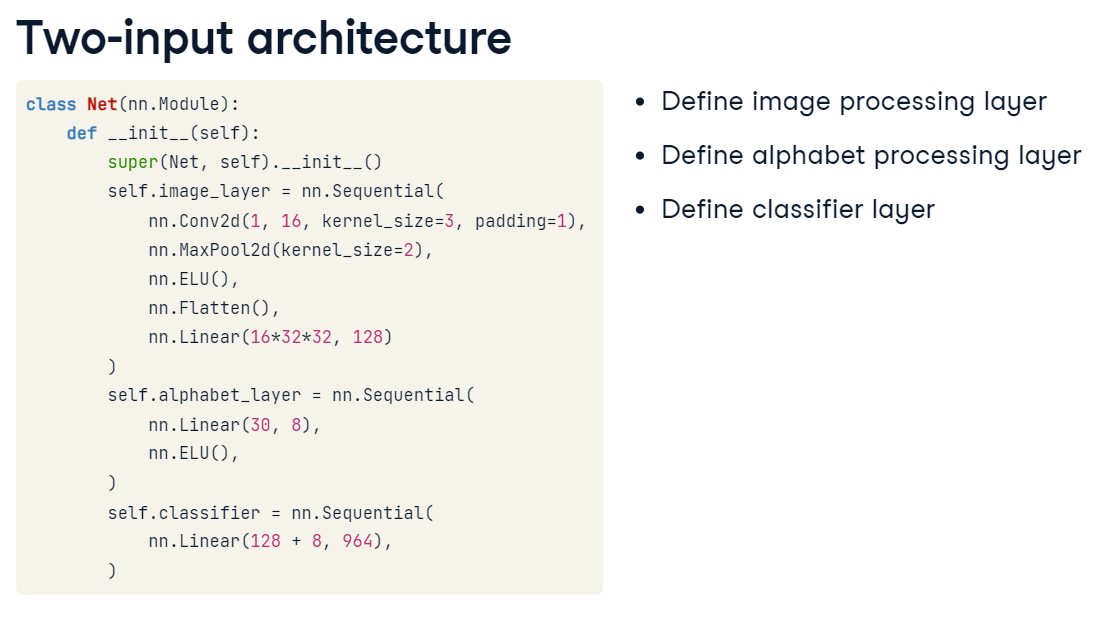

Two-input Architecture

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

# Define sub-networks as sequential models

self.image_layer = nn.Sequential(

nn.Conv2d(1, 16, kernel_size=3, padding=1),

nn.MaxPool2d(kernel_size=2),

nn.ELU(),

nn.Flatten(),

nn.Linear(16*32*32, 128)

)

self.alphabet_layer = nn.Sequential(

nn.Linear(30, 8),

nn.ELU(),

)

self.classifier = nn.Sequential(

nn.Linear(128 + 8, 964),

)

def forward(self, x_image, x_alphabet):

# Pass the x_image and x_alphabet through appropriate layers

x_image = self.image_layer(x_image)

x_alphabet = self.alphabet_layer(x_alphabet)

# Concatenate x_image and x_alphabet

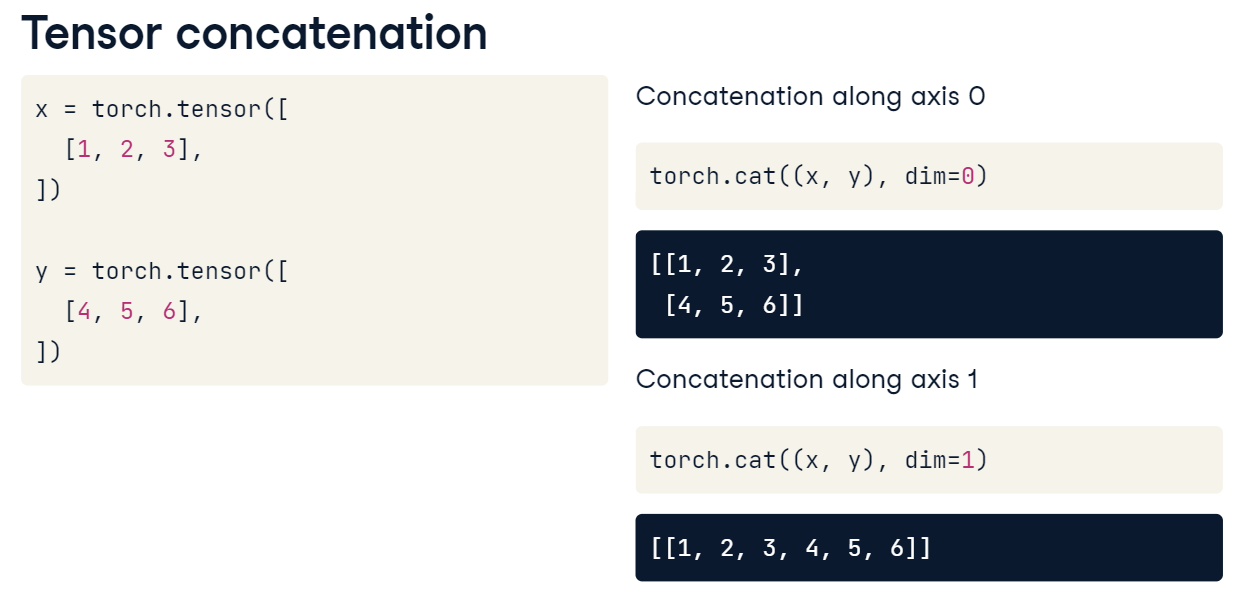

x = torch.cat((x_image, x_alphabet), dim=1)

return self.classifier(x)Multi-Output

# Create dataset_train

dataset_train = OmniglotDataset(

transform=transforms.Compose([

transforms.ToTensor(),

transforms.Resize((64, 64)),

]),

samples=samples,

)

# Create dataloader_train

dataloader_train = DataLoader(

dataset_train, batch_size = 32, shuffle=True,

)

Two-output model Architecture

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.image_layer = nn.Sequential(

nn.Conv2d(1, 16, kernel_size=3, padding=1),

nn.MaxPool2d(kernel_size=2),

nn.ELU(),

nn.Flatten(),

nn.Linear(16*32*32, 128)

)

# Define the two classifier layers

self.classifier_alpha = nn.Linear(128, 30)

self.classifier_char = nn.Linear(128, 964)

def forward(self, x):

x_image = self.image_layer(x)

# Pass x_image through the classifiers and return both results

output_alpha = self.classifier_alpha(x_image)

output_char = self.classifier_char(x_image)

return output_alpha, output_char

Training Multi-output model

net = Net()

criterion = nn.CrossEntropyLoss()

optimizer = optim.SGD(net.parameters(), lr=0.05)

for epoch in range(1):

for images, labels_alpha, labels_char in dataloader_train:

optimizer.zero_grad()

outputs_alpha, outputs_char = net(images)

# Compute alphabet classification loss

loss_alpha = criterion(outputs_alpha, labels_alpha)

# Compute character classification loss

loss_char = criterion(outputs_char, labels_char)

# Compute total loss

loss = loss_alpha + loss_char

loss.backward()

optimizer.step()

Multi-output model Evaluation

def evaluate_model(model):

# Define accuracy metrics

acc_alpha = Accuracy(task="multiclass", num_classes=30)

acc_char = Accuracy(task="multiclass", num_classes=964)

model.eval()

with torch.no_grad():

for images, labels_alpha, labels_char in dataloader_test:

# Obtain model outputs

outputs_alpha, outputs_char = model(images)

_, pred_alpha = torch.max(outputs_alpha, 1)

_, pred_char = torch.max(outputs_char, 1)

# Update both accuracy metrics

acc_alpha(pred_alpha, labels_alpha)

acc_char(pred_char, labels_char)

print(f"Alphabet: {acc_alpha.compute()}")

print(f"Character: {acc_char.compute()}")

loss들 결합할 때는 표준화를 해 주어야 합니다

안그러면 어떤거는 숫자가 엄청 큰데, 다른건 상대적으로 작게 로스가 찍혀서, 가중치가 이상하게 부여될 수 있음