Images & Convolutional Neural Networks

Data Augmentation

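Image datasets are often small compared to other kinds of data, so they can be artificially enlarged through augmentation: applying transforms such as flips and rotations to the existing images.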
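To make the idea concrete, here is a minimal library-free sketch of what a flip and a rotation do to a pixel grid. The tiny `image` and both helper functions are hypothetical and only for illustration; in a real PyTorch pipeline one would more likely use the random transforms in `torchvision.transforms` (for example `RandomHorizontalFlip` and `RandomRotation`).

```python
# Toy 2x3 "image" as a nested list of pixel values (hypothetical data).
image = [
    [1, 2, 3],
    [4, 5, 6],
]

def horizontal_flip(img):
    """Mirror each row left to right."""
    return [row[::-1] for row in img]

def rotate_90_clockwise(img):
    """Rotate 90 degrees clockwise: reverse the row order, then transpose."""
    return [list(col) for col in zip(*img[::-1])]

flipped = horizontal_flip(image)
rotated = rotate_90_clockwise(image)

print(flipped)  # [[3, 2, 1], [6, 5, 4]]
print(rotated)  # [[4, 1], [5, 2], [6, 3]]
```

Each transformed copy keeps the original label, so the model sees more variety without any new data being collected.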

CNN (Convolutional Neural Network)

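A small filter (kernel) slides across the image and collects information from each local patch of pixels.

Compared with nn.Linear(), this preserves the spatial patterns of the input and uses far fewer parameters.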
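A rough parameter count shows how large the gap can be. This sketch assumes a 64x64 RGB input and compares a 3x3 convolution producing 32 channels against a fully connected layer producing an output of the same size; the input size and both helper functions are assumptions made for illustration.

```python
def conv2d_params(in_channels, out_channels, kernel_size):
    """Weights of one k x k filter per (in, out) channel pair, plus one bias per output channel."""
    return in_channels * out_channels * kernel_size * kernel_size + out_channels

def linear_params(in_features, out_features):
    """One weight per (input, output) pair, plus one bias per output."""
    return in_features * out_features + out_features

height = width = 64  # assumed image size

conv = conv2d_params(3, 32, kernel_size=3)
dense = linear_params(3 * height * width, 32 * height * width)

print(conv)   # 896
print(dense)  # 1610743808
```

The convolution reuses the same small filter at every position, which is why its parameter count does not depend on the image size at all.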

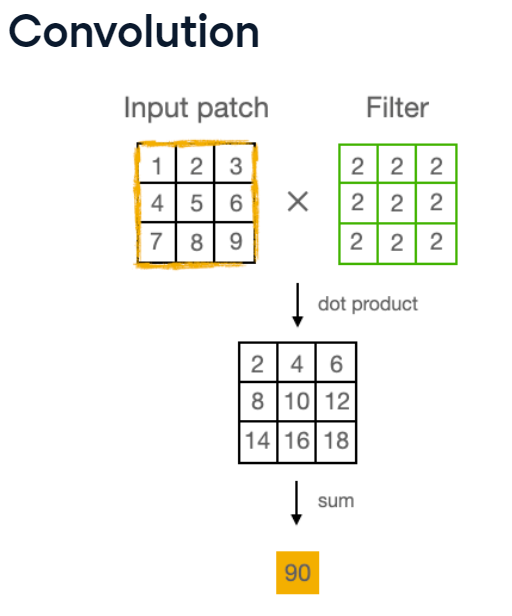

Convolution step : multiply the input patch and the filter element-wise, then sum the products (i.e. take their dot product).
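The multiply-and-sum step can be sketched in plain Python as follows. This is a minimal single-channel version with no padding and stride 1; the function name and the toy `image` and `kernel` values are made up for illustration. Like nn.Conv2d, it does not flip the kernel (strictly speaking, a cross-correlation).

```python
def convolve2d(image, kernel):
    """Slide a square kernel over the image; at each position multiply element-wise and sum."""
    k = len(kernel)
    out_h = len(image) - k + 1
    out_w = len(image[0]) - k + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(k):
                for dj in range(k):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

image = [
    [1, 2, 3, 0],
    [4, 5, 6, 1],
    [7, 8, 9, 2],
]
kernel = [
    [1, 0],
    [0, -1],
]

feature_map = convolve2d(image, kernel)
print(feature_map)  # [[-4, -4, 2], [-4, -4, 4]]
```

For example, the top-left output is 1*1 + 2*0 + 4*0 + 5*(-1) = -4.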

Padding preserves the spatial dimensions of the output and keeps information at the image borders from being lost. It is set with the padding=n argument of nn.Conv2d().
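The effect of padding on the output size follows the usual formula out = floor((in + 2*padding - kernel) / stride) + 1. A small sketch, with a hypothetical helper name and an assumed input size of 64:

```python
def conv_output_size(size, kernel_size, padding=0, stride=1):
    """Output height/width of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel_size) // stride + 1

no_padding = conv_output_size(64, kernel_size=3, padding=0)
with_padding = conv_output_size(64, kernel_size=3, padding=1)

print(no_padding)    # 62
print(with_padding)  # 64
```

With a 3x3 kernel, padding=1 is exactly what keeps the output the same size as the input.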

import torch.nn as nn

class Net(nn.Module):
    def __init__(self, num_classes):
        super().__init__()
        # Define feature extractor: the conv -> activation -> pool block is usually applied twice
        self.feature_extractor = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=3, padding=1),
            nn.ELU(),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(32, 64, kernel_size=3, padding=1),
            nn.ELU(),
            nn.MaxPool2d(kernel_size=2),
            nn.Flatten(),
        )
        # Define classifier; 64*16*16 input features implies 64x64 input images
        self.classifier = nn.Linear(64*16*16, num_classes)

    def forward(self, x):
        # Pass input through feature extractor and classifier
        x = self.feature_extractor(x)
        x = self.classifier(x)
        return x
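The 64*16*16 input size of the classifier can be checked by tracing the spatial size through the feature extractor. This sketch assumes 64x64 input images (the size that makes the numbers work out); the helper functions are hypothetical.

```python
def conv_out(size, kernel_size=3, padding=1, stride=1):
    """Spatial size after a convolution (defaults match the Conv2d layers above)."""
    return (size + 2 * padding - kernel_size) // stride + 1

def pool_out(size, kernel_size=2):
    """Spatial size after MaxPool2d, whose stride defaults to its kernel size."""
    return size // kernel_size

size = 64      # assumed input height and width
channels = 3   # RGB input

for out_channels in (32, 64):   # the two conv blocks
    size = conv_out(size)       # padding=1 keeps the size unchanged
    channels = out_channels
    size = pool_out(size)       # each pooling step halves the size

flattened = channels * size * size
print(size, channels, flattened)  # 16 64 16384
```

If the images had a different size, the first argument of nn.Linear would have to change accordingly.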

Image Classifier Training loop

import torch.nn as nn
import torch.optim as optim

# Define the model
net = Net(num_classes=7)
# Define the loss function
criterion = nn.CrossEntropyLoss()
# Define the optimizer
optimizer = optim.Adam(net.parameters(), lr=0.001)

for epoch in range(3):
    running_loss = 0.0
    # Iterate over training batches (dataloader_train is assumed to be defined elsewhere)
    for images, labels in dataloader_train:
        optimizer.zero_grad()
        outputs = net(images)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()

    # Average loss per batch for this epoch
    epoch_loss = running_loss / len(dataloader_train)
    print(f"Epoch {epoch+1}, Loss: {epoch_loss:.4f}")

Precision & Recall

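Precision : of all examples predicted as positive, the fraction that are actually positive

= Fraction of correct positive predictions

Recall : of all examples that are actually positive, the fraction that were predicted as positive

= Fraction of all positive examples correctly predicted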
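Both definitions reduce to counting true positives (TP), false positives (FP) and false negatives (FN): precision = TP / (TP + FP) and recall = TP / (TP + FN). A small sketch on made-up binary labels:

```python
# Hypothetical binary labels: 1 = positive, 0 = negative.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 1, 1, 0, 0, 1]

pairs = list(zip(y_true, y_pred))
tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # predicted positive, actually positive
fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # predicted positive, actually negative
fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # predicted negative, actually positive

precision = tp / (tp + fp)
recall = tp / (tp + fn)

print(tp, fp, fn)  # 3 2 1
print(precision)   # 0.6
print(recall)      # 0.75
```

Here the model finds most of the positives (recall 0.75) but two of its five positive predictions are wrong (precision 0.6).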

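In multi-class problems, precision and recall are computed for each class separately and then combined with one of three averaging methods:

Macro average : unweighted mean of the per-class metrics, so every class counts equally

Micro average : metric computed once from the counts pooled over all classes, so every example counts equally

Weighted average : mean of the per-class metrics, weighted by the number of examples in each class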
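The three averages can give different numbers on the same predictions, especially with imbalanced classes. A minimal sketch for precision on made-up 3-class labels (all values and names here are hypothetical):

```python
# Hypothetical labels for 3 classes; class 0 is the majority class.
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 0, 0, 1, 1, 1, 1, 2, 0]
classes = [0, 1, 2]

def precision_for(c):
    """Precision of class c: correct predictions of c / all predictions of c."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if p == c and t == c)
    predicted = sum(1 for p in y_pred if p == c)
    return tp / predicted if predicted else 0.0

per_class = [precision_for(c) for c in classes]   # [0.8, 0.5, 1.0]
support = [y_true.count(c) for c in classes]      # [6, 2, 2]

# Macro: plain mean over classes.
macro = sum(per_class) / len(classes)
# Micro: pool the counts, i.e. all true positives / all predictions.
micro = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_pred)
# Weighted: mean over classes, weighted by class support.
weighted = sum(p * s for p, s in zip(per_class, support)) / sum(support)

print(round(macro, 4), round(micro, 4), round(weighted, 4))  # 0.7667 0.7 0.78
```

Macro treats the small classes as equally important as the large one, which makes it the more sensitive choice when minority classes matter.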

Multi-class model evaluation

import torch
from torchmetrics import Precision, Recall

# Define metrics
metric_precision = Precision(task="multiclass", num_classes=7, average="micro")
metric_recall = Recall(task="multiclass", num_classes=7, average="micro")

net.eval()
with torch.no_grad():
    for images, labels in dataloader_test:
        outputs = net(images)
        # Predicted class = index of the largest output per sample
        _, preds = torch.max(outputs, 1)
        # Update the running metric state with this batch
        metric_precision(preds, labels)
        metric_recall(preds, labels)

# Aggregate over all batches
precision = metric_precision.compute()
recall = metric_recall.compute()
print(f"Precision: {precision}")
print(f"Recall: {recall}")
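One thing to keep in mind with average="micro": when every sample has exactly one label, each wrong prediction is both a false positive (for the predicted class) and a false negative (for the true class), so micro precision, micro recall and accuracy all coincide. A library-free sketch on made-up labels:

```python
# Hypothetical single-label predictions for 3 classes.
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 0, 0, 1, 1, 1, 1, 2, 0]
classes = sorted(set(y_true) | set(y_pred))

# Pool TP / FP / FN counts over all classes.
tp = fp = fn = 0
for c in classes:
    for t, p in zip(y_true, y_pred):
        tp += (p == c and t == c)
        fp += (p == c and t != c)
        fn += (p != c and t == c)

micro_precision = tp / (tp + fp)
micro_recall = tp / (tp + fn)
accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)

print(micro_precision, micro_recall, accuracy)  # 0.7 0.7 0.7
```

To see precision and recall diverge, use average="macro", average="weighted", or average=None.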

Analyzing metrics per class

import torch
from torchmetrics import Precision

# Define precision metric; average=None returns one value per class
metric_precision = Precision(task="multiclass", num_classes=7, average=None)

net.eval()
with torch.no_grad():
    for images, labels in dataloader_test:
        outputs = net(images)
        _, preds = torch.max(outputs, 1)
        metric_precision(preds, labels)
precision = metric_precision.compute()

# Get precision per class, keyed by class name
precision_per_class = {
    k: precision[v].item()
    for k, v in dataset_test.class_to_idx.items()
}
print(precision_per_class)
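The final dictionary comprehension just pairs each class name with the metric value at that class's index. A sketch with made-up values, where `class_to_idx` stands in for the name-to-index mapping that torchvision's ImageFolder datasets expose and `precision` is a plain list instead of a tensor:

```python
# Hypothetical class names and per-class precision values.
class_to_idx = {"cat": 0, "dog": 1, "bird": 2}
precision = [0.8, 0.5, 1.0]  # position i holds the precision of class index i

precision_per_class = {
    name: precision[idx]
    for name, idx in class_to_idx.items()
}

# The class with the lowest precision is the one the model over-predicts the most.
worst_class = min(precision_per_class, key=precision_per_class.get)

print(precision_per_class)  # {'cat': 0.8, 'dog': 0.5, 'bird': 1.0}
print(worst_class)          # dog
```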